Results Display

- Results Display Overview

- Results Tab

- General Results Display Procedures

- Audit Results Display Option

- Report Results Display Option

- History Results Display Option

- HTTP Data List Results Display Option

- HTTP Data Graphs

- Timer List

- SNMP and NT Performance Collector Graphs

Results Display Overview

HTTP/S Load provides a variety of data collection and display options to assist you in the analysis of Test results. Running a Test and displaying the results enables you to identify whether the web application environments under test are able to meet the processing demands you anticipate will be placed on them. After a Test-run is complete, use Commander to control which results are displayed and how they are presented. This helps you analyze the performance of the target web applications and the network used to run the Test.

Open the Test you want from the Repository Window and click on the Results tab in the Test Pane, then choose the results you want to display using the Results Window. Depending on the category of results you select, data is displayed in graph or table format. You can choose from a wide range of tables and customizable graphs to display your results, which can be filtered and exported for further analysis and printing. Use the Results Window to view multiple graphs and tables simultaneously to compare results from different Test-runs.

When a Test is run a wide range of results data is collected automatically. Virtual User response times and resource utilization information is recorded from all web sites under test, along with performance data from web application components and the Hosts used to run the Test. Results categories include the Test Summary option which presents a brief description of the Test and the Task Groups settings that applied during a Test-run. The Test Audit log records significant events that occur during a Test-run and the HTTP Data List records the HTTP/S requests issued, including the response times and codes for every request. The Timer List option records the length of time taken to load each web page defined in the Scripts referenced by a Test.

Creating and referencing Collectors in a Test helps to improve the quality and extend the range of the results data produced during a Test-run. Collectors give you the ability to target the Host computers and devices used to run a Test and the back-end database components of web applications under test, with user-defined data collection queries. Use NT Performance and SNMP Collectors to collect data from Host devices within target web applications or the test network.

The range of results produced during a Test-run can depend on the content of the Scripts that are referenced by a Test. For example, Report and History logs are only produced if the Scripts included have been modeled to incorporate the SCL commands used to generate the data content for these logs.

See also:

History Results Display Option

HTTP Data List Results Display Option

SNMP and NT Performance Collector Graphs

Results Tab

Results are stored in the Repository after a Test-run is complete. To view them, open the Test you want from the Repository Window, then click on the Results tab in the Test Pane. Use the Results Window to select the results you want to view in the workspace of the Test Pane. You can reposition the Results Window by floating it over the Main Window to give yourself more room for results display, or close it once you have selected the results options you want to view.

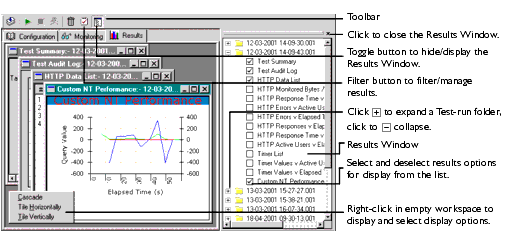

The Results Tab of the Test Pane

Results Tab Display Options

Graphs can be customized to improve the presentation of data by right-clicking within a graph then selecting Customize. This function includes options that enable you to modify the graph style from the default line plot to a vertical bar, as well as controlling the color of elements within the graph display.

Some graphs and tables can be modified by filtering the URL data they represent. Right-click within a graph or table, then select Filter URLs to control the information displayed, or click the Filter button in the toolbar. You can also opt to export results data for further analysis and printing. Right-click and select Export to Excel or Export from the menu.

You can also zoom in on a graph by clicking and dragging over the area of the graph you want to study. Use the Windows option to control the presentation of results options in the Test Pane, or right-click within the empty workspace of the Test Pane to access these functions as illustrated in the diagram above.

See also:

Customize HTTP Data Graphs Display

The Results Window

When you click on the Results tab, the Results Window opens automatically. Its default location is on the right-hand side of the Test Pane where it is docked. Use it to select and display results from any of the Test-runs associated with the current Test.

Test-runs are stored in date and time stamped folders, which you can open by double-clicking on them or by clicking the expand icon next to them. When you open a Test-run folder, the available results are listed below it. Display the results you want by clicking on the options and ticking the check boxes to the left of the results options. The results you choose are displayed in the Test Pane.

Multiple graphs and tables from different Test-runs associated with the current Test can be displayed concurrently. Use the Results Window to select additional Test-runs and equivalent results options to compare Test results and help evaluate performance.

Results Window Display Options

The Results Window is located on the right-hand side of the Test Pane. It can be closed to increase the workspace area available, or moved to a new position by floating it over the Main Window.

See also:

Hide/Display The Results Window

Hide/Display The Results Window

- Click the close button in the double bar at the top of the Results Window to close it.

- Click the toggle button in the toolbar to switch between hiding and displaying the Results Window.

Move The Results Window

- Click on the double bar at the top of the Results Window.

- Drag, then drop it in the new position within the Main Window.

Resize The Results Window

- Move your cursor over part of the window edge.

- Click and drag, then drop the border in the required position.

General Results Display Procedures

- Display Test Results

- Customize HTTP Data Graphs Display

- Zoom In and Out of a Graph

- Export Test Results

- Close Results Display Options

- Delete Test Results

Display Test Results

- In the Repository Window, double-click Tests to expand the directory structure.

- Double-click the Test whose results you want to display.

- In the Test Pane, click the Results tab.

- In the Results Window, click the expand icon next to a Test-run folder, or double-click on the folder, to open it and view a list of results display options and Task Group results folders.

- Click the check box next to a results option to display your selection in the Test Pane, or open a Task Group folder and select from the display options listed.

- A ticked check box to the left of a results option indicates that it is open in the Test Pane.

- Note: Click the close button in the title bar of a graph or table to close it, or deselect the results option in the Results Window by clicking on the option.

- Tip: All available results have display and output options associated with them. These options may include filtering, customizing and exporting. Right-click within a graph or table to display and select from the choices available.

- Use the Windows option in the Menu Bar to control the display of graphs and tables. Or, right-click within the empty workspace of the Test Pane to access these functions.

Customize HTTP Data Graphs Display

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click on a graph results option to open your selection in the Test Pane.

- Right-click inside the graph and select Customize.

- Select the Graph Type you want:

- Line plot: A single line connecting values.

- Vertical bars: A single, solid vertical bar per value.

- Area under points: The area beneath the line plot is filled.

Zoom In and Out of a Graph

- Open a Test and click the Results tab of the Test Pane.

- In the Results Window, click the expand icon next to a Test-run folder, or double-click on the folder, to open it and view a list of results display options and Task Group results folders.

- Click the check box next to a graph option to display your selection in the Test Pane.

- Click and drag over the area of the graph you want to zoom in on and release your mouse button.

- Double-click anywhere in the graph to zoom out and return to the full graph display.

Export Test Results

- Open a Test and click the Results tab of the Test Pane.

- In the Results Window, click the expand icon next to a Test-run folder, or double-click on the folder, to open it and view a list of results display options and Task Group results folders.

- Click the check box next to a results option to display your selection in the Test Pane.

- Right-click inside the graph or table and select either Export to Excel (graphs), or Export (tables and lists).

- Note: The Export to Excel option automatically launches Excel and converts the data into Microsoft Excel Workbook format. Save and edit your results as required.

- The Export option enables you to export results as a .CSV file. The Test Summary results option only supports text file format for exporting data.

Close Results Display Options

- Click the close button in the title bar of a graph or table to close it.

- Use the Results Window to close a graph or table by clicking on the results option and unchecking the check box to the left of the option.

- Open a different Test or a Collector from the Repository Window.

Delete Test Results

- Open a Test and click the Results tab of the Test Pane.

- Click the button for deleting Test-runs in the toolbar.

- In the Delete Test-runs dialog box, select the Test-runs you want to delete.

- Click Delete to remove the results from the Repository.

Audit Results Display Option

The Audit log contains a list of significant events that have occurred during a Test-run, including the times and details of Test initiation and completion, errors that may have occurred and Virtual User details.

Additional Audit log entries may be written to the log if the Scripts included in the Test have been modeled to incorporate the appropriate SCL code. Use the LOG SCL command in a Script, to generate the data content for the Test Audit log. For more information on SCL refer to the SCL Reference Guide; an on-line copy is available within the Script Modeler, Help menu.

See also:

Display Audit Data

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click the Test Audit log results option in the list.

Report Results Display Option

The Report log is a sequential text file that is used to record information about a single Test-run. Usually, a single record is written to the Report log whenever a Test case passes or fails.

Additional Report log entries may be written to the log if the Scripts included in the Test have been modeled to incorporate the appropriate SCL code. Use the REPORT SCL command in a Script, to generate the data content for the Test Report log. For more information on SCL refer to the SCL Reference Guide; an on-line copy is available within the Script Modeler, Help menu.

See also:

Display Report Data

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click the Test Report log results option in the list.

History Results Display Option

The History log is a sequential text file that is used to maintain a chronological history of each occasion on which the Test was run, together with the results of that Test. Usually, a single record is written to the History log when the Test-run is complete.

In addition, further Test History log entries may be written to the log if the Scripts included in the Test have been modeled to incorporate the appropriate SCL code. Use the HISTORY SCL command in a Script, to generate the data content for the Test History log. For more information on SCL refer to the SCL Reference Guide; an on-line copy is available within the Script Modeler, Help menu.

See also:

Display History Data

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click the Test History log results option in the list.

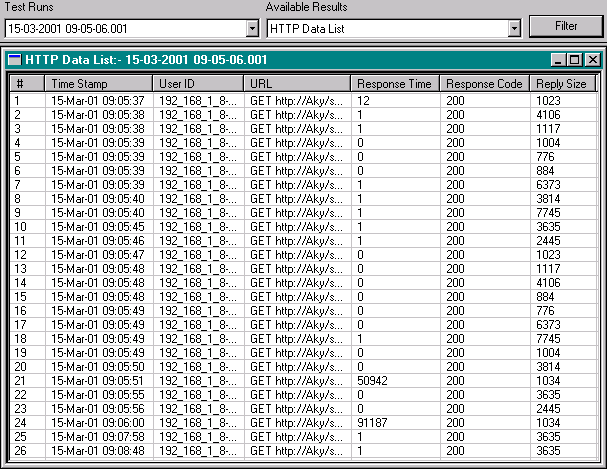

HTTP Data List Results Display Option

The HTTP Data List file gives details of the HTTP requests issued by Scripts during a Test-run. The information stored includes the response times and codes for the HTTP requests issued during a Test. The quality of the HTTP data recorded depends on the level of Server Statistics you specified when you created the Test and defined the properties to be applied. The Server Statistics setting controls the number of Virtual Users that the statistics are gathered for and can be edited in the Test Definition section.

The information collected is presented in a table and can be sorted by clicking on the column headings to reverse the display order of the data entries. These results can also be filtered to show only the entries you want by clicking inside the table and selecting the Filter option.

HTTP Data List results can be exported to a .CSV text file, which allows them to be imported into many other data analysis and report generating tools.
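As an illustration of what such further analysis might look like, the following Python sketch reads an exported file and summarizes response times by response code. The column headings used here (`Response Time`, `Response Code`, and so on) are assumptions based on the filter categories described in this section; check the header row of your own export and adjust the names accordingly. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict
from statistics import mean


def summarize_by_code(csv_file):
    """Return {response code: (request count, mean response time in ms)}."""
    times_by_code = defaultdict(list)
    for row in csv.DictReader(csv_file):
        # Column names are assumed; match them to your exported header row.
        times_by_code[row["Response Code"]].append(float(row["Response Time"]))
    return {code: (len(times), mean(times)) for code, times in times_by_code.items()}


# Made-up rows standing in for an exported HTTP Data List file.
SAMPLE = """Time,Response Time,Response Code,Reply Size,URL,User ID
10:00:01,120,200,5120,http://example.com/,10.0.0.1
10:00:01,80,200,2048,http://example.com/a.gif,10.0.0.1
10:00:02,300,404,512,http://example.com/missing,10.0.0.2
"""

summary = summarize_by_code(io.StringIO(SAMPLE))
for code, (count, avg_ms) in sorted(summary.items()):
    print(f"{code}: {count} request(s), mean {avg_ms:.1f} ms")
```

To run this against a real export, pass an open file instead of the in-memory sample, for example `summarize_by_code(open("http_data_list.csv", newline=""))`, where the file name is whatever you chose when exporting.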

See also:

Display the HTTP Data List

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click the HTTP Data List results option in the list to open it in the Test Pane.

- HTTP Data List information is displayed in table format:

- Click the Filter button when it becomes active to control the results displayed. For more information, see Filter HTTP Data List Results.

- Right-click within the table and select Export to convert the data into a .CSV text file for use in other packages. For more information, see Export Test Results.

- Tip: Display multiple graphs and tables concurrently to compare results using the Results Window.

- Note: Click the close button in the title bar to close it.

See also:

Filter HTTP Data List Results

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click on a results option to open your selection in the Test Pane.

- Click the Filter button in the toolbar, or right-click inside the graph or table and select Filter URLs.

- The filter criteria available correspond to the column categories in the HTTP Data List table. Select your settings from the filter options:

- Time: Date and time HTTP GETs and POSTs were issued.

- Response Time: Web site response time to GETs in milliseconds.

- Response Code: Code issued by web site in response to GETs.

- Reply Size: Size of data response to GETs issued by web site in bytes.

- URLs: Filter by URL.

- User ID: Filter by Virtual User(s) identity (IP address).
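The same kind of filtering can be applied outside Commander to exported data. The sketch below is only an approximation of the idea, not a description of how the Filter dialog works internally. The function names, the dictionary keys and the sample rows are all hypothetical, with the keys modeled on the filter categories listed above.

```python
def matches(row, url_contains=None, codes=None, min_response_ms=None, user_id=None):
    """Return True if a row passes every criterion that was supplied."""
    if url_contains is not None and url_contains not in row["URL"]:
        return False
    if codes is not None and row["Response Code"] not in codes:
        return False
    if min_response_ms is not None and float(row["Response Time"]) < min_response_ms:
        return False
    if user_id is not None and row["User ID"] != user_id:
        return False
    return True


def filter_rows(rows, **criteria):
    """Keep only the rows that satisfy all of the given criteria."""
    return [row for row in rows if matches(row, **criteria)]


# Made-up rows; keys mirror the assumed column headings.
ROWS = [
    {"Response Time": "120", "Response Code": "200", "URL": "http://example.com/", "User ID": "10.0.0.1"},
    {"Response Time": "450", "Response Code": "500", "URL": "http://example.com/cart", "User ID": "10.0.0.2"},
    {"Response Time": "90", "Response Code": "404", "URL": "http://example.com/missing", "User ID": "10.0.0.2"},
    {"Response Time": "300", "Response Code": "200", "URL": "http://example.com/cart", "User ID": "10.0.0.1"},
]

# Slow error responses: code 404 or 500 and at least 200 ms.
slow_errors = filter_rows(ROWS, codes={"404", "500"}, min_response_ms=200)
# All requests to the cart page from one Virtual User.
cart_by_user = filter_rows(ROWS, url_contains="/cart", user_id="10.0.0.1")
print(len(slow_errors), len(cart_by_user))
```

Criteria that are left unset are ignored, so calling `filter_rows(ROWS)` with no criteria returns every row.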

See also:

HTTP Data Graphs

The volume of HTTP data recorded is controlled by the logging level you set for a Task Group's Virtual Users. The logging level determines the number of Virtual Users that data is collected for and controls the quality of the data displayed in the graphs. The HTTP data collected relates only to responses to HTTP requests issued as part of a Test.

The HTTP data collected during a Test-run can be displayed in a number of different graphs where you can scrutinize your Test results. There are seven graphs in total which you can display using the Results Window.

Right-click within a graph to select Customize, Export to Excel, or Filter URLs.

See also:

HTTP Response Time (Average per Second) v Number of Responses Graph

HTTP Errors v Active Users Graph

HTTP Errors v Elapsed Time Graph

HTTP Responses v Elapsed Time Graph

HTTP Response Time v Elapsed Time Graph

HTTP Active User v Elapsed Time Graph

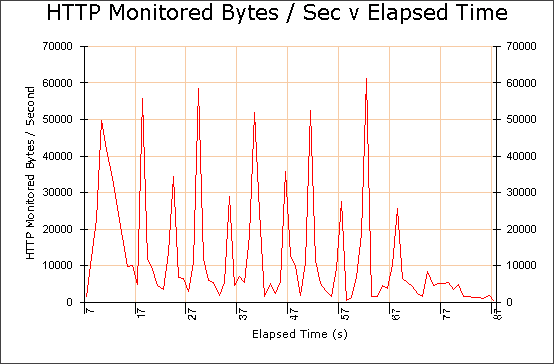

Display HTTP Data Graphs

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click on an HTTP data graph option such as HTTP Monitored Bytes / Second v Elapsed Time to open your selection in the Test Pane.

- This graph shows the total number of bytes per second returned during the Test-run.

- Click the Filter button when it becomes active to control the results displayed or right-click inside the graph and select Filter URLs.

- Note: Graphs are displayed in the default line plot style. Right-click within a graph and select Customize from the menu to change their appearance.

- Right-click within the graph and select Export to Excel to convert the data into Microsoft Excel Workbook format.

- Note: Click the close button in the title bar to close it.

HTTP Response Time (Average per Second) v Number of Responses Graph

This graph displays the average response time for requests grouped by the number of requests per second during a Test-run.
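One way to read this graph is as a two-step grouping: first count the responses that fall in each second, then average the response times of all seconds that share the same count. The Python sketch below reproduces that calculation on made-up data. It is an interpretation of the description above, not the tool's actual algorithm, and the `(elapsed second, response time in ms)` sample format is an assumption.

```python
from collections import defaultdict
from statistics import mean


def response_time_by_load(samples):
    """Map responses-per-second to the mean response time (ms) at that load."""
    # Step 1: collect the response times observed in each elapsed second.
    by_second = defaultdict(list)
    for elapsed_s, response_ms in samples:
        by_second[elapsed_s].append(response_ms)
    # Step 2: pool the times of all seconds that saw the same number of responses.
    by_load = defaultdict(list)
    for times in by_second.values():
        by_load[len(times)].extend(times)
    return {load: mean(times) for load, times in sorted(by_load.items())}


# Made-up samples: (elapsed second, response time in ms).
SAMPLES = [(0, 100), (1, 150), (1, 250), (2, 120), (3, 300), (3, 500)]

load_curve = response_time_by_load(SAMPLES)
for load, avg_ms in load_curve.items():
    print(f"{load} response(s)/s: mean {avg_ms} ms")
```

A rising curve in such a plot suggests that response times degrade as throughput increases.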

Use the Filter button when it becomes active to control the results displayed. Alternatively, right-click within the graph and select the Filter URLs option.

Other right-click menu options include Export to Excel and Customize.

HTTP Errors v Active Users Graph

This graph is used to display the effect on performance measured by the number of HTTP server errors returned as the number of active Virtual Users varies during a Test-run.

Note: This graph has been customized to display data points as vertical bars. Right-click within a graph and select Customize, then select Graph Type Vertical bars.

Make use of the Filter URLs and Export to Excel options associated with this graph by right-clicking within it.

HTTP Errors v Elapsed Time Graph

This graph displays a cumulative count of the number of HTTP server errors returned during the Test-run.
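A cumulative count means each point on the graph includes every error seen up to that moment, so the line never falls. The following sketch shows the calculation on made-up data. The sample format is hypothetical, and treating any status code of 400 or above as an error is an assumption; this section does not state which codes the tool counts.

```python
from itertools import accumulate


def cumulative_errors(samples, duration_s):
    """Return the running total of error responses for each elapsed second."""
    per_second = [0] * duration_s
    for elapsed_s, code in samples:
        if code >= 400:  # assumed definition of an HTTP error
            per_second[elapsed_s] += 1
    return list(accumulate(per_second))


# Made-up samples: (elapsed second, HTTP response code).
SAMPLES = [(0, 200), (1, 500), (1, 404), (2, 200), (3, 503)]

series = cumulative_errors(SAMPLES, duration_s=4)
print(series)
```

A flat stretch in the series corresponds to a period with no new errors, while a steep step marks a burst of failures.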

Note: This graph has been customized to display the area under the data points as a solid. Right-click within a graph and select Customize > Area under points from the menu to change the appearance of your graphs.

HTTP Responses v Elapsed Time Graph

This graph displays the total number of HTTP responses per second during the Test-run.

Right-click within a graph to select Customize, Export to Excel, or Filter URLs.

HTTP Response Time v Elapsed Time Graph

This graph displays the average response time per second of all the requests issued during the Test-run.

Use the right-click menu options to select Customize, Export to Excel, or Filter URLs.

HTTP Active User v Elapsed Time Graph

This graph displays the total number of active Virtual Users sampled at fixed intervals during a Test-run.

Right-click within a graph to select Customize, Export to Excel, or Filter URLs.

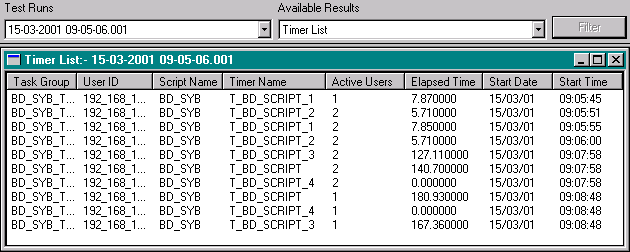

Timer List

The Timer List file gives details of the Timers recorded during a Test-run. Timer results data records the time taken to load each web page specified by a Script for every Virtual User running the Script during a Test-run. The level of Timer information recorded is controlled by adjusting the Virtual User settings in the Test's Script-based Task Groups. Open the Test with the Configuration tab of the Test Pane displayed, then click on a VUs table cell in a Task Group and check the Generate Timers for each page option in the Properties Window. The logging level you select here controls the volume of HTTP data and the number of timers recorded.

The information collected is presented in a table and can be sorted by clicking on the column headings to reverse the display order of the data entries.

Timer List results can be exported to a .CSV text file, which allows them to be imported into many other data analysis and report generating tools.
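As a rough example of such analysis, the Python sketch below computes the minimum, mean and maximum value for each timer in an exported file. The column headings `Timer` and `Value`, the timer names and the sample figures are all hypothetical, since the layout of the exported Timer List is not described here; adapt them to the header row of your own export.

```python
import csv
import io
from collections import defaultdict


def timer_stats(csv_file):
    """Return {timer name: (min, mean, max)} for the recorded timer values."""
    values = defaultdict(list)
    for row in csv.DictReader(csv_file):
        # Column names are assumed; match them to your exported header row.
        values[row["Timer"]].append(float(row["Value"]))
    return {name: (min(v), sum(v) / len(v), max(v)) for name, v in values.items()}


# Made-up rows standing in for an exported Timer List file.
SAMPLE = """Timer,Value
T_HOME,1200
T_HOME,1800
T_LOGIN,900
"""

stats = timer_stats(io.StringIO(SAMPLE))
for name, (lowest, average, highest) in sorted(stats.items()):
    print(f"{name}: min {lowest:.0f}, mean {average:.0f}, max {highest:.0f}")
```

Comparing these figures between Test-runs gives a quick indication of whether page load times have changed.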

See also:

Timer Values v Active Users Graph

Timer Values v Elapsed Time Graph

Display the Timer List

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click the Timer List results option to open your selection in the Test Pane.

- Timer List information is displayed in table format:

- Note: Right-click within the table and select Export to save the data to a .CSV text file, which allows results to be imported into other data analysis and report generating tools.

- Tip: Display multiple graphs and tables concurrently to compare results using the Results Window.

- Note: Click the close button in the title bar to close it.

Timer Values v Active Users Graph

This graph is used to display the effect on performance as measured by timers, as the number of Virtual Users varies.

This graph enables you to choose which timers you want to display. A dialog box appears when you select this option. Use it to choose the timers you want to view, then click OK to proceed.

Right-click within a graph to select Customize, Export to Excel, or Filter URLs.

Timer Values v Elapsed Time Graph

This graph is used to display the average timer values per second.

This graph enables you to choose which timers you want to display. A dialog box appears when you select this option. Use it to choose the timers you want to view, then click OK to proceed.

Right-click within a graph to select Customize, Export to Excel, or Filter URLs.

SNMP and NT Performance Collector Graphs

The data collection queries defined in a Collector generate results data that can be displayed in custom graphs. All NT Performance Collector data is displayed in the Custom NT Performance graph. All SNMP Collector data is displayed in the Custom SNMP graph.

If you have more than one Collector of the same type referenced in a Test, all the results collected are merged and displayed in one graph.

Use the Filter option to select and display specific data collection queries defined in the Collectors. The unique names you assigned to each query are displayed below the graph in a color coded key. The IP address of the Host used to run the Collector Task during a Test-run is automatically listed alongside the query name.

Right-click within a graph to select Customize, Export to Excel, or Filter.

See also:

Display Custom Collector Graphs

Display Custom Collector Graphs

- Open a Test and click the Results tab in the Test Pane.

- In the Results Window, double-click on a Test-run folder, or click the expand icon next to it, to open it and display the available results.

- Click the Custom NT or Custom SNMP results option in the list to open your selection in the Test Pane.

Custom SNMP Graph

The data collection queries defined in the Collectors referenced by a Test are color coded for easy identification. Each query displays the IP address of the Host targeted during a Test-run.
